CIS 375 SOFTWARE ENGINEERING

UNIVERSITY OF MICHIGAN-DEARBORN

DR. BRUCE MAXIM, INSTRUCTOR

Date: 9/29/97

Week 4

SOFTWARE QUALITY:

ASSESS QUALITY:

- Correctness;

- Maintainability;

- Mean Time To Change (MTTC).

- Spoilage = (cost of change / total cost of system).

- Integrity = [1 - threat * (1 - security)];

- Threat = probability of an attack (something that causes failure).

- Security = probability attack is repelled.

- Usability, measured by:

- Time to learn system.

- Time required to become proficient.

- Net productivity increase.

- Subjective attitude survey.

- DRE (defect removal efficiency):

- DRE = E / (E + D).

- E = the number of errors found before delivery.

- D = the number of errors found after delivery.
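The spoilage, integrity, and DRE formulas above are plain arithmetic, so a minimal Python sketch of them follows. The function names and sample figures (threat = 0.25, security = 0.95; 90 errors found before delivery and 10 after; a $20,000 change on a $200,000 system) are illustrative assumptions, not data from these notes.

```python
def spoilage(cost_of_change: float, total_cost_of_system: float) -> float:
    """Fraction of the total system cost spent on changes."""
    return cost_of_change / total_cost_of_system


def integrity(threat: float, security: float) -> float:
    """Probability the system is NOT compromised by one type of attack.

    threat   -- probability that the attack occurs
    security -- probability that the attack is repelled if it occurs
    """
    return 1.0 - threat * (1.0 - security)


def dre(errors_before_delivery: int, errors_after_delivery: int) -> float:
    """Defect removal efficiency: E / (E + D)."""
    e, d = errors_before_delivery, errors_after_delivery
    return e / (e + d)


print(round(integrity(threat=0.25, security=0.95), 4))  # 0.9875
print(dre(90, 10))                                      # 0.9
print(spoilage(20_000, 200_000))                        # 0.1
```

A DRE close to 1 means almost all errors were caught before the customer saw them.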

QUALITY:

- Of Design - characteristics of product specified by designer.

- Of Conformance - degree to which the specifications were followed during manufacture.

QUALITY ASSURANCE:

TOTAL QUALITY MANAGEMENT (TQM):

- Develop visible and repeatable processes.

- Examine intangibles affecting processes and optimize their impact.

- Concentrate on the user's application of the product and improve the process.

- Examine uses of the product in the marketplace.

SOFTWARE QUALITY ASSURANCE:

- Conformance to software requirements is the basis for measuring quality.

- Specified standards define development criteria and guide the software engineering process.

- There is also an implicit set of requirements that must be met even though it is not stated.

SOFTWARE QUALITY ASSURANCE GROUP:

Duties:

- Prepare SQA plan.

- Participate in developing the project's software process description.

- Review engineering activities for process compliance.

- Audit software product for compliance.

- Ensure product deviations are well documented.

STRUCTURED WALKTHROUGH (DESIGN REVIEW):

A peer review of a work product; when done properly, it has been found to eliminate 80% of all errors.

WHY DO PEER REVIEW?

- Improves quality.

- Catches both coding errors and design errors.

- Enforces the spirit of any organizational standards.

- Provides training and insurance (more than one person understands the product).

FORMALITY AND TIMING:

Formal presentations - resemble conference presentations.

Informal presentations - less detailed, but equally correct.

Early - informal (if held too early, there may not be enough information).

Later - formal (if held too late, a large amount of flawed work may already have accumulated).

When?

- After analysis.

- After design.

- After the first compilation.

- After the first test run.

- After all test runs.

ROLES IN WALKTHROUGH:

- Presenter (designer/producer).

- Coordinator (not the person who hires/fires).

- Secretary (records the events of the meeting and builds a paper trail).

- Reviewers:

- Maintenance oracle.

- Standards bearer.

- User representative.

GUIDELINES FOR WALKTHROUGH:

- Keep it short (< 30 minutes).

- Don't schedule two in a row.

- Don't review product fragments.

- Use standards to avoid style disagreements.

- Let the coordinator run the meeting.

FORMAL APPROACHES TO SQA:

- Proof of correctness.

- Statistical quality assurance.

- Collect/categorize sample.

- If defects are found, trace them back to the code.

- Cleanroom process (combines proof of correctness and statistical quality assurance).

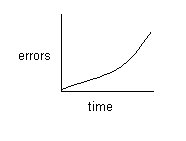
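A minimal sketch of the collect/categorize step of statistical quality assurance: tally a sample of defects by cause category and rank the categories, so that effort can go to the few causes behind most defects (the Pareto idea commonly cited for statistical SQA). The category names and the sample below are hypothetical.

```python
from collections import Counter

# Hypothetical sample: each collected defect has already been given a cause category.
sample = [
    "incomplete spec", "logic error", "incomplete spec", "interface error",
    "logic error", "incomplete spec", "data handling", "incomplete spec",
]


def categorize(defects):
    """Tally defects by category, most frequent first."""
    return Counter(defects).most_common()


for category, count in categorize(sample):
    share = 100 * count / len(sample)
    print(f"{category:16s} {count:2d}  {share:5.1f}%")
```

Here "incomplete spec" accounts for half of the sample, so tracing those defects back to their source would be the first target.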

SOFTWARE RELIABILITY:

Measures:

MTBF (mean time between failures) = MTTF (mean time to failure) + MTTR (mean time to repair).

Availability = [MTTF / (MTTF + MTTR)] * 100% = (MTTF / MTBF) * 100%.
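The MTBF and availability formulas can be checked with a small sketch; the figures (MTTF = 95 hours, MTTR = 5 hours) are made-up inputs chosen to give round numbers.

```python
def mtbf(mttf: float, mttr: float) -> float:
    """Mean time between failures: MTTF + MTTR (both in the same time unit)."""
    return mttf + mttr


def availability(mttf: float, mttr: float) -> float:
    """Availability as a percentage: MTTF / (MTTF + MTTR) * 100%."""
    return mttf * 100.0 / mtbf(mttf, mttr)


print(mtbf(95.0, 5.0))          # 100.0
print(availability(95.0, 5.0))  # 95.0
```

Shortening repair time raises availability even if the failure behavior (MTTF) stays the same.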

RELIABILITY MODELS:

- Models that predict reliability as a function of chronological (calendar) time.

VS

- Models that predict reliability as a function of elapsed processor (execution) time.

RELIABILITY MODELS DERIVED FROM HARDWARE RELIABILITY ASSUMPTIONS:

- Debugging time between errors has an exponential distribution whose rate is proportional to the number of remaining errors.

- Each error is removed as it is discovered.

- Failure rate between errors is constant.
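Taken together, these three assumptions describe the Jelinski-Moranda model. The sketch below, assuming N initial errors and a per-error failure contribution `phi` (both hypothetical parameters), shows how the constant failure rate between errors drops, and the expected time to the next failure grows, as errors are removed.

```python
def failure_rate(n_initial: int, fixed: int, phi: float) -> float:
    """Constant failure rate after `fixed` errors have been removed.

    Assumes the rate is proportional to the number of remaining errors,
    with each remaining error contributing `phi` failures per unit time.
    """
    remaining = n_initial - fixed
    if remaining < 0:
        raise ValueError("cannot remove more errors than were present")
    return phi * remaining


def expected_time_to_next_failure(n_initial: int, fixed: int, phi: float) -> float:
    """Mean of the exponential time between failures, i.e. 1 / rate."""
    rate = failure_rate(n_initial, fixed, phi)
    return float("inf") if rate == 0 else 1.0 / rate


# Hypothetical: 50 initial errors, phi = 0.01 failures per hour per error.
for fixed in (0, 25, 45, 49):
    print(fixed, expected_time_to_next_failure(50, fixed, 0.01))
```

Because each error is removed as it is discovered, `fixed` also counts the failures observed so far.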

MODELS DERIVED FROM INTERNAL PROGRAM CHARACTERISTICS:

SEEDING MODELS:

Deliberately place known errors into the code at arbitrary locations, then see how long it takes to catch the seeded errors.
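Seeding results are often used to estimate how many real (indigenous) errors remain. One common estimator, usually attributed to Mills and not stated in these notes, assumes seeded and indigenous errors are equally likely to be found; the counts below are hypothetical.

```python
def estimate_indigenous_errors(seeded: int, seeded_found: int, indigenous_found: int) -> float:
    """Estimate the total number of indigenous (real) errors.

    Assumes seeded_found / seeded is approximately equal to
    indigenous_found / total_indigenous.
    """
    if seeded_found == 0:
        raise ValueError("no seeded errors found yet; estimate is undefined")
    return indigenous_found * seeded / seeded_found


# Hypothetical test campaign: 20 errors seeded, 15 of them caught,
# along with 30 genuine (indigenous) errors.
total = estimate_indigenous_errors(seeded=20, seeded_found=15, indigenous_found=30)
print(total)       # 40.0
print(total - 30)  # 10.0 estimated errors still remaining
```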

CRITERIA FOR EVALUATING MODELS:

- Predictive validity.

- Capability (produce useful data).

- Quality of assumptions.

- Applicability (can it be applied across different domain types).

- Simplicity.

Date: 10/1/97

Week 4

MAINTENANCE:

(Longest life cycle phase)

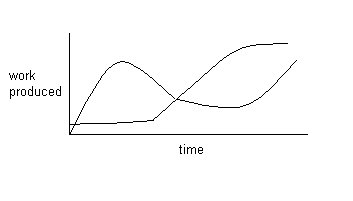

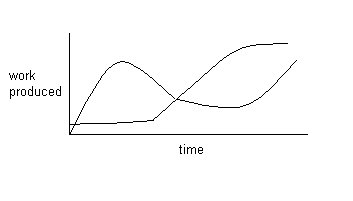

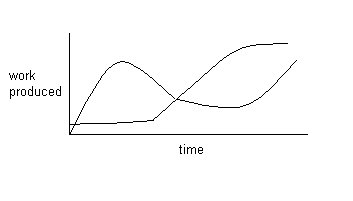

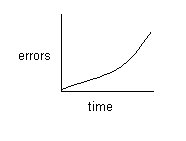

EVOLVING VS. DECLINING SYSTEM:

- High maintenance cost.

- Unacceptable reliability.

- Is the system adaptable to change?

- Time required to effect a change.

- Unacceptable performance.

- System functions limited in usefulness.

- Are other systems available (faster, cheaper)?

- High cost to maintain hardware.

MAINTENANCE TEAM:

- Understand system.

- Locate information in documentation.

- Keep system documentation up to date.

- Extend existing functions.

- Add new functions.

- Find sources of errors.

- Correct system errors.

- Answer operations questions.

- Restructure design and code.

- Delete obsolete design and code.

- Manage changes.

TYPES OF MAINTENANCE:

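- Corrective (21%): fixing errors in the original product.

- Adaptive (25%): changing the product to accommodate changes elsewhere in the system or in its environment (hardware, operating system, other components).

- Perfective (50%): making changes the client needs (enhancements and improvements).

- Preventive (4%): changing the product to prevent future errors.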

MAINTENANCE DIFFICULTY FACTORS:

- Maintainer's limited understanding of the hardware and software.

- Management priorities (maintenance is low priority).

- Technical problems.

- Testing difficulties (finding problems).

- Morale problems (maintenance is boring).

- Compromise (decision making problems).

MAINTENANCE COST FACTORS:

- Type of applications supported.

- Staff turnover.

- System life span.

- Dependence on changing environments.

- Hardware characteristics.

- Quality of design.

- Quality of code.

- Quality of documentation.

- Quality of testing.

CONFIGURATION MANAGEMENT:

(Tracking changes)

CONFIGURATION MANAGEMENT TEAM:

- Analysts.

- Programmers.

- Program Librarian.

CONFIGURATION CONTROL BOARD:

(Change control board)

- Customer representatives.

- Some members of the configuration management team.

PROCESS:

(Of changes)

- A problem is discovered.

- The problem is reported to the configuration control board.

- The board discusses the problem (is the problem a failure or an enhancement, and who should pay for it?).

- The board assigns the problem a priority or severity level and assigns staff to fix it.

- A programmer or analyst locates the source of the problem and determines what is needed to fix it.

- The programmer works with the librarian to control the installation of the changes in the operational system and the documentation.

- The programmer files a change report documenting all changes made.
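The change process above is essentially a fixed sequence of states. A minimal sketch, with hypothetical class and step names, that walks one change request through those steps in order, keeps a history as its paper trail, and refuses to leave the discussion step until the board has set a priority and assigned staff:

```python
from enum import Enum


class Step(Enum):
    """Steps of the change process, in the order the notes list them."""
    DISCOVERED = 1
    REPORTED_TO_BOARD = 2
    DISCUSSED = 3
    PRIORITIZED_AND_ASSIGNED = 4
    SOURCE_LOCATED = 5
    INSTALLED_WITH_LIBRARIAN = 6
    CHANGE_REPORT_FILED = 7


class ChangeRequest:
    """Tracks one problem through the configuration control process."""

    def __init__(self, description: str):
        self.description = description
        self.step = Step.DISCOVERED
        self.history = [Step.DISCOVERED]  # paper trail of every step reached
        self.priority = None
        self.assignee = None

    def assign(self, priority: int, assignee: str) -> None:
        """Board records the priority/severity level and the staff assigned."""
        self.priority = priority
        self.assignee = assignee

    def advance(self) -> Step:
        """Move to the next step in the fixed order."""
        if self.step is Step.CHANGE_REPORT_FILED:
            raise RuntimeError("change request is already closed")
        if self.step is Step.DISCUSSED and self.assignee is None:
            raise RuntimeError("board must assign priority and staff first")
        self.step = Step(self.step.value + 1)
        self.history.append(self.step)
        return self.step


cr = ChangeRequest("monthly report totals are wrong")
cr.advance()  # reported to the board
cr.advance()  # discussed by the board
cr.assign(priority=1, assignee="analyst")
while cr.step is not Step.CHANGE_REPORT_FILED:
    cr.advance()
print([s.name for s in cr.history])
```

Enforcing the order in code is one way to address the authorization and synchronization issues listed next; a real system would also record who approved each step.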

ISSUES:

(Of change process)

- Synchronization (when?).

- Identification (who?).

- Naming (what?).

- Authentication (done correctly?).

- Authorization (who O.K.'d it?).

- Routing (who's informed?).

- Cancellation (who can stop it?).

- Delegation (responsibility issue).

- Valuation (priority issue).

AUTOMATED TOOLS FOR MAINTENANCE:

- Text editors (better than punch cards).

- File comparison tools.

- Compilers and linkage editors.

- Debugging tools.

- Cross reference generators.

- Complexity calculators.

- Control libraries.

- Full life cycle CASE tools.

COMPUTER SYSTEM ENGINEERING:

- Software Engineering.

- Hardware Engineering.

COMPUTER SYSTEM ELEMENTS:

- Software.

- Hardware.

- People.

- Databases.

- Documentation.

- Procedures (human-oriented steps, not code).

COMPUTER SYSTEM ENGINEER/ANALYST TASKS:

- Transform customer-defined goals and constraints into a system representation describing:

- Function.

- Performance.

- Interfaces.

- Design constraints.

- Information structures.

- Bound the system and select the configuration using:

- Project schedule and costs.

- Business considerations.

- Technical analysis.

- Manufacturing evaluations.

- Human issues.

- Environmental interface.

- Legal considerations.

SOFTWARE ENGINEERING:

- Definition phase:

- Produce software project plan.

- Produce software requirements plan.

- Revised software project plan.

- Development phase:

- Deliver first design specifications.

- Module descriptions added to design specs after review.

- Coding after design is complete.

- Verification, release, and maintenance:

- Validation of source code.

- Test plan.

- Customer testing and acceptance.

- Maintenance.

HUMAN FACTORS AND HUMAN ENGINEERING:

(HCI - human computer interaction)

- Activity analysis (watch the people you're supporting).

- Semantic analysis and design (what people do and why they do it).

- Syntactic and lexical design (hardware and software implementation).

- User environment design (physical facilities and the human-computer interface).

- Prototyping.