CIS 375 SOFTWARE ENGINEERING

UNIVERSITY OF MICHIGAN-DEARBORN

DR. BRUCE MAXIM, INSTRUCTOR

Date: 11/24/97

Week 12

SOFTWARE RELIABILITY:

- Probability estimation.

- Reliability.

- R = MTBF / (1 + MTBF)

- 0 < R < 1

- Availability.

- A = MTBF / (MTBF + MTTR)

- Maintainability.

- M = 1 / (1 + MTTR)

- Software Maturity Index (SMI).

Mt = # of modules in current release.

Fc = # of changed modules.

Fa = # of modules added to previous release.

Fd = # of modules deleted from previous release.

SMI = [Mt - (Fa + Fc + Fd)] / Mt

{As SMI approaches 1, the software becomes more stable}
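The four measures above can be checked with a few lines of Python. This is only a sketch: the function names and the sample figures (MTBF = 99 hours, MTTR = 1 hour, a 100-module release) are illustrative assumptions, not values from the lecture, and MTBF and MTTR are assumed to be in the same time unit.

```python
def reliability(mtbf: float) -> float:
    """R = MTBF / (1 + MTBF), as written in the notes."""
    return mtbf / (1.0 + mtbf)


def availability(mtbf: float, mttr: float) -> float:
    """A = MTBF / (MTBF + MTTR)."""
    return mtbf / (mtbf + mttr)


def maintainability(mttr: float) -> float:
    """M = 1 / (1 + MTTR)."""
    return 1.0 / (1.0 + mttr)


def software_maturity_index(mt: int, fa: int, fc: int, fd: int) -> float:
    """SMI = [Mt - (Fa + Fc + Fd)] / Mt."""
    if mt <= 0:
        raise ValueError("Mt must be positive")
    return (mt - (fa + fc + fd)) / mt


if __name__ == "__main__":
    # Hypothetical sample values, chosen only for illustration.
    print("R   =", round(reliability(99.0), 3))
    print("A   =", round(availability(99.0, 1.0), 3))
    print("M   =", round(maintainability(1.0), 3))
    print("SMI =", round(software_maturity_index(100, 5, 10, 5), 3))
```

With these sample numbers 20 of the 100 modules were touched, so SMI comes out at 0.8. A release with no added, changed, or deleted modules would give SMI = 1.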

ESTIMATING # OF ERRORS:

- Error seeding.

(s / S) = (n / N)

- Two groups of independent testers.

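x = # of real errors found by group 1.

y = # of real errors found by group 2.

q = # of errors found by both groups (overlap).

n = total # of real errors.

E(1) = (x / n) = (# of real errors found by group 1 / total # of real errors) = (q / y) = (# overlap / # found by group 2)

E(2) = (y / n) = (q / x) = (# overlap / # found by group 1)

n = q / (E(1) * E(2))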

x = 25, y = 30, q = 15

E(1) = (15 / 30) = .5

E(2) = (15 / 25) = .6

n = 15 / [(.5)(.6)] = 50 errors
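Both estimators in this section reduce to a few lines of arithmetic. The sketch below assumes that x and y are the error counts found by groups 1 and 2 and that q is the overlap, which is the reading that reproduces the worked example. The function names and the seeding figures (100 seeded, 20 of them found, 10 real errors found) are hypothetical.

```python
def seeded_estimate(seeded_total: int, seeded_found: int, real_found: int) -> float:
    """Error seeding: s / S = n / N, solved for N = n * S / s."""
    if seeded_found <= 0:
        raise ValueError("at least one seeded error must be found")
    return real_found * seeded_total / seeded_found


def two_group_estimate(x: int, y: int, q: int) -> float:
    """Two independent test groups: n = q / (E1 * E2)."""
    if q <= 0:
        raise ValueError("the groups must share at least one error")
    e1 = q / y  # effectiveness of group 1 = overlap / found by group 2
    e2 = q / x  # effectiveness of group 2 = overlap / found by group 1
    return q / (e1 * e2)


if __name__ == "__main__":
    # Figures from the worked example: x = 25, y = 30, q = 15.
    print("two-group estimate:", round(two_group_estimate(25, 30, 15)))
    # Hypothetical seeding run.
    print("seeding estimate:  ", round(seeded_estimate(100, 20, 10)))
```

Algebraically n = q / ((q / y)(q / x)) = x * y / q, so the estimate grows as the overlap between the two groups shrinks.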

CONFIDENCE IN SOFTWARE:

- S = # of seeded errors.

N = # of actual errors.

C (confidence level) = 1 if n > N

C (confidence level) = [S / (S + N + 1)] if n ≤ N

- Seeded errors found so far.

C = 1 if n > N

C = S / (S + N + 1)

S = # of seeded errors

s = # seeded errors found

N = # of actual errors

n = # of actual errors found so far
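A small sketch of the confidence calculation, using the S / (S + N + 1) form and assuming that all S seeded errors have already been found. The function name and the sample figures are illustrative assumptions.

```python
def confidence(seeded: int, claimed_actual: int, actual_found: int) -> float:
    """Confidence that the program holds at most `claimed_actual` real errors.

    Assumes testing continued until every seeded error was found.
    """
    if actual_found > claimed_actual:
        return 1.0  # branch "C = 1 if n > N" from the notes
    return seeded / (seeded + claimed_actual + 1)


if __name__ == "__main__":
    # Hypothetical case: 10 seeded errors, claim of 0 real errors, none found.
    print(round(confidence(10, 0, 0), 3))
```

Under this formula, seeding more errors raises the confidence attached to the same claim: with N = 0, going from S = 10 to S = 99 moves C from 10/11 to 99/100.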

INTENSITY OF FAILURE:

- Suppose that intensity is proportional to # of faults or errors

at start of testing.

function    duty time
A           90%
B           10%

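Suppose 100 total errors: 50 in A, 50 in B (K = constant of proportionality).

Initial intensity: (.9)50K + (.1)50K = 50K

After removing all errors in A: (.1)50K = 5K

or

After removing all errors in B: (.9)50K = 45K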
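The duty-time weighting can be sketched as a weighted sum. This assumes each function contributes (duty time) * (errors) * K to the failure intensity and that the two single-term lines correspond to clearing all errors from one function. The function name and K = 1 are illustrative assumptions.

```python
def failure_intensity(errors: dict, duty: dict, k: float = 1.0) -> float:
    """Sum of duty_time * errors * K over all functions."""
    return sum(duty[name] * count * k for name, count in errors.items())


if __name__ == "__main__":
    duty = {"A": 0.9, "B": 0.1}
    # Intensities are reported in units of K because k defaults to 1.
    print("initial:      ", round(failure_intensity({"A": 50, "B": 50}, duty), 6))
    print("A cleaned up: ", round(failure_intensity({"A": 0, "B": 50}, duty), 6))
    print("B cleaned up: ", round(failure_intensity({"A": 50, "B": 0}, duty), 6))
```

On this reading, removing the 50 errors in the heavily used function A cuts the intensity from 50K to 5K, while removing the same number of errors from B only brings it down to 45K.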

- Zero failure testing.

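failures to time t = a * e^(-b * t)

zero-failure test hours = [ln(failures / (0.5 + failures)) / ln((0.5 + failures) / (test + failures))] * (test hours to last failure)

where failures = projected # of failures allowed and test = # of failures found in testing so far.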

Example:

33,000 line program.

15 = errors found.

500 = # of hours total testing.

50 = # of hours since last failure.

If we need a failure rate of 0.03 / 1000 LOC:

failures = (.03)(33) ≈ 1

[ln(1 / (0.5 + 1)) / ln((0.5 + 1) / (15 + 1))] * 450 ≈ 77 hours
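The example can be reproduced with the sketch below. It assumes the denominator of the second logarithm is (failures found + target failures), which is the reading that yields the 77 hours quoted above, and that the 450 hours is total test time minus time since the last failure (500 - 50). The function and parameter names are hypothetical.

```python
import math


def zero_failure_test_hours(target_failures: float,
                            failures_found: int,
                            hours_to_last_failure: float) -> float:
    """Failure-free test hours needed to support the target failure count."""
    numerator = math.log(target_failures / (0.5 + target_failures))
    denominator = math.log((0.5 + target_failures)
                           / (failures_found + target_failures))
    return numerator / denominator * hours_to_last_failure


if __name__ == "__main__":
    kloc = 33                        # 33,000-line program
    target = round(0.03 * kloc)      # 0.99, rounded to 1 as in the notes
    hours_to_last = 500 - 50         # total test hours minus hours since last failure
    needed = zero_failure_test_hours(target, 15, hours_to_last)
    print("zero-failure hours needed:", round(needed))
```

Since only 50 failure-free hours have accumulated so far, this sketch suggests testing would have to continue without a failure for roughly another 27 hours.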

CASE TOOLS:

- Analytic tools used during software development.

- They assist in the development and maintenance of software.

UPPER CASE (FRONT-END TOOLS):

- requirements

- specification

- planning

- design

LOWER CASE (BACK-END TOOLS):

- implementation

- integration

- maintenance

TAXONOMY OF CASE TOOLS:

- Information engineering tools.

- Process modeling and management tools.

- Project planning tools.

- Cost / effort estimation.

- Project scheduling tools.

- Risk analysis tools.

- Project management tools.

(Monitor project & maintain management plan)

- Requirements tracing tools.

- Metrics & management tools.

- Documentation tools.

- System software tools.

- Q.A. tools.

- Database management tools.

- Software configuration management tools.

- Analysis & design tools.

- PRO / SIM tools.

- Interface design tools.

- Prototyping tools.

- Programming tools.

(Compilers, editors, etc.)

- Integration & testing tools.

- Static analysis tools.

- Dynamic analysis tools.

- Test management tools.

- Client/server testing tools.

- Reengineering tools.

Date: 11/26/97

Week 12

"LIFE CYCLE" CASE:

I-CASE ENVIRONMENT:

- Mechanism for sharing SE information among tools.

- Tracking change.

- Version control & configuration management.

- Direct access to any tool.

- Automated support for a work breakdown structure that matches the tools.

- Support communication among software engineers.

- Collect management and technical metrics.

INTEGRATION ARCHITECTURE:

- User interface layer.

- Tools management services (TMS).

- Object management layer.

- Shared repository layer (CASE database).

CASE REPOSITORY (DB) IN I-CASE:

Role:

- Data integrity.

- Information sharing.

- Data/tool integration.

- Data/data integration.

- Methodology enforcement.

- Document standardization.

Content:

- Problem to be solved.

- Problem domain.

- Emerging solution.

- Rules pertaining to software process methodology.

- Project plan.

- Organizational context.

DBMS:

- Non-redundant data storage.

- High-level data access.

- Data independence.

- Transaction control.

- Security.

- Ad hoc queries & reports.

- Openness (import/export).

- Multi-user support.

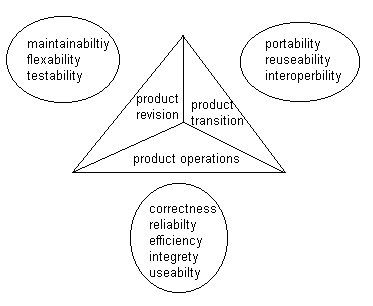

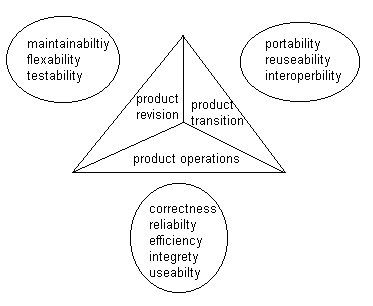

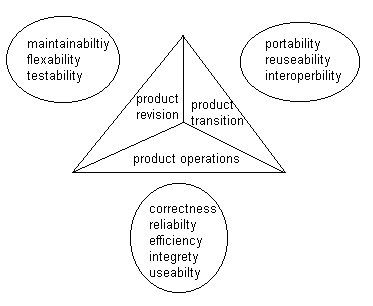

SOFTWARE QUALITY:

McCall's software quality factors (regression)

SOFTWARE QUALITY MEASUREMENT PRINCIPLES:

- Formulation.

- Collection of information.

- Analysis of information.

- Interpretation.

- Feedback.

ATTRIBUTES OF EFFECTIVE SOFTWARE METRICS:

- Simple and computable.

- Empirically & intuitively persuasive.

- Consistent & objective.

- Consistent in use of units & dimension.

- Programming language independent.

- Provide mechanism for quality feedback.

SAMPLE METRICS:

- Function-based (function points).

- Bang metric (DeMarco).

- RE / FuP (relationships / functional primitives):

- (< 0.7) function-strong applications.

- (> 1.5) data-strong applications.
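The RE / FuP thresholds above translate directly into a small classifier. This is only a sketch: the function name is hypothetical, and the "hybrid" label for ratios between 0.7 and 1.5 is an assumption, since the notes name only the two outer ranges.

```python
def classify_application(re_count: int, fup_count: int) -> str:
    """Classify an application by its RE / FuP ratio."""
    if fup_count <= 0:
        raise ValueError("FuP count must be positive")
    ratio = re_count / fup_count
    if ratio < 0.7:
        return "function-strong"
    if ratio > 1.5:
        return "data-strong"
    return "hybrid"  # assumption: the notes do not label this middle range


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(classify_application(12, 40))
    print(classify_application(90, 30))
```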

SPECIFICATION QUALITY METRICS:

nr = nf + nnf

where:

- nr = total # of requirements in the specification.

- nf = # of functional requirements.

- nnf = # of non-functional requirements.

Specificity, Q1 = nai / nr

- nai = # of requirements with reviewer agreement.

Completeness, Q2 = nu / (ni * ns)

- nu = unique functions.

- ni = # of inputs.

- ns = # of states.

Overall completeness, Q3 = nc / (nc + nnv)

- nc = # validated & correct.

- nnv = # not validated.
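The three specification quality ratios can be computed as follows. This is a sketch under the assumption that Q1 divides the number of requirements with reviewer agreement by the total number of requirements. All function names and sample counts are hypothetical.

```python
def specificity(agreed: int, total_requirements: int) -> float:
    """Q1 = requirements with reviewer agreement / total requirements."""
    return agreed / total_requirements


def completeness(unique_functions: int, inputs: int, states: int) -> float:
    """Q2 = nu / (ni * ns)."""
    return unique_functions / (inputs * states)


def overall_completeness(validated_correct: int, not_validated: int) -> float:
    """Q3 = nc / (nc + nnv)."""
    return validated_correct / (validated_correct + not_validated)


if __name__ == "__main__":
    # Hypothetical specification: 50 requirements, 40 read identically by reviewers.
    print("Q1 =", round(specificity(40, 50), 2))
    # 18 unique functions over 6 inputs and 4 states.
    print("Q2 =", round(completeness(18, 6, 4), 2))
    # 45 requirements validated as correct, 5 not yet validated.
    print("Q3 =", round(overall_completeness(45, 5), 2))
```

For Q1 and Q3, values closer to 1 indicate a less ambiguous and more fully validated specification.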

SOFTWARE QUALITY INDICES:

IEEE standard - SMI (Software Maturity Index)

- SMI = [Mt - (Fa + Fc + Fd)] / Mt

- Mt = number of modules in current release.

- Fa = modules added.

- Fc = modules changed.

- Fd = modules deleted.

COMPONENT LEVEL METRICS:

- Cohesion metric:

- Data slice.

- Data tokens.

- Glue tokens.

- Super glue tokens.

- Stickiness.

- Coupling metrics:

- For data & control flow coupling.

- For global coupling.

- For environmental coupling.

- Complexity metrics.

- Interface design metrics.