CIS 375 SOFTWARE ENGINEERING

University Of Michigan-Dearborn

Dr. Bruce Maxim, Instructor

System Design:

Characteristics Of Conceptual Design:

Technical Design Contents:

Design Approaches:

OR

Program Design:

Program Design Modules:

Program Design Guidelines:

vs.

(There are risks and benefits for both)

What Is A Module?

A set of contiguous program statements with:

(Self contained, sometimes can be compiled separately)

Modules either contain executable code or create data.

Determining Program Complexity:

{More modules - fewer errors in each, but more errors between them}

Program Complexity Factors:

(too many lines of code between uses of a variable)

(too many lines of code in one module)

(too many "if - then - else", etc.)

(too much information to have to know)

(x,y -> x,y; not y1,y2)

(good presentation)

Software Reliability:

R = MTBF / (1 + MTBF)

0 < R < 1

A = MTBF / (MTBF + MTTR)

M = 1 / (1 + MTTR)
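A minimal Python sketch of the three measures above, assuming MTBF and MTTR are given in the same time unit (hours here). The function names and sample values are hypothetical, chosen only for illustration.

```python
def reliability(mtbf: float) -> float:
    """R = MTBF / (1 + MTBF); strictly between 0 and 1 for any MTBF > 0."""
    return mtbf / (1 + mtbf)


def availability(mtbf: float, mttr: float) -> float:
    """A = MTBF / (MTBF + MTTR): the share of time the system is available."""
    return mtbf / (mtbf + mttr)


def maintainability(mttr: float) -> float:
    """M = 1 / (1 + MTTR): shorter repair times push M toward 1."""
    return 1 / (1 + mttr)


if __name__ == "__main__":
    # Hypothetical measurements, both in hours.
    mtbf, mttr = 99.0, 1.0
    print(f"R = {reliability(mtbf):.2f}")
    print(f"A = {availability(mtbf, mttr):.2f}")
    print(f"M = {maintainability(mttr):.2f}")
```

With MTTR = 1, R and A happen to coincide; as repair time grows, A drops below R.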

Mt = # of modules in current release.

Fc = # of changed modules.

Fa = # of modules added to previous release.

Fd = # of modules deleted from previous release.

SMI = [Mt - (Fa + Fc + Fd)] / Mt

{As SMI approaches 1, the software becomes more stable}
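The SMI formula can be sketched in Python as follows; the function name and the release history are hypothetical, used only to show SMI rising toward 1 as fewer modules are added, changed, or deleted.

```python
def software_maturity_index(mt: int, fa: int, fc: int, fd: int) -> float:
    """SMI = [Mt - (Fa + Fc + Fd)] / Mt.

    mt: modules in the current release
    fa: modules added, fc: modules changed, fd: modules deleted
    """
    if mt <= 0:
        raise ValueError("Mt must be positive")
    return (mt - (fa + fc + fd)) / mt


if __name__ == "__main__":
    # Hypothetical releases as (Mt, Fa, Fc, Fd); change shrinks each time.
    releases = [(100, 20, 30, 10), (105, 8, 10, 3), (106, 1, 2, 0)]
    for mt, fa, fc, fd in releases:
        print(mt, round(software_maturity_index(mt, fa, fc, fd), 3))
```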

Estimating # Of Errors:

(s / S) = (n / N)

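E(1) = x / n = (# of real errors found by group 1 / total # of real errors) = q / y = (# overlap / # found by group 2)

E(2) = y / n = q / x = (# overlap / # found by group 1)

n = q / (E(1) * E(2))

where x = # of errors found by group 1, y = # found by group 2, and q = # found by both groups; the two groups are assumed to find errors independently.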

x = 25, y = 30, q = 15

E(1) = (15 / 30) = .5

E(2) = (15 / 25) = .6

n = [15 / (.5)(.6)] = 50 errors
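A small Python sketch of the two-group estimate, reproducing the worked example. The function name is hypothetical; like the formula, it assumes the two groups find errors independently. Note that q / (E(1) * E(2)) simplifies to x * y / q.

```python
def estimate_total_errors(x: int, y: int, q: int) -> float:
    """Estimate total errors n from two independent test groups.

    x: errors found by group 1, y: errors found by group 2,
    q: errors found by both groups (the overlap).
    """
    if q == 0:
        raise ValueError("no overlap: the estimate is undefined")
    e1 = q / y  # E(1), effectiveness of group 1
    e2 = q / x  # E(2), effectiveness of group 2
    return q / (e1 * e2)


if __name__ == "__main__":
    print(estimate_total_errors(25, 30, 15))  # the example: x = 25, y = 30, q = 15
```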

Confidence In Software:

C (confidence level) = 1 if n > N

C (confidence level) = S / (S + N + 1) if n ≤ N (after all S seeded errors have been found)

S = # of seeded errors

s = # seeded errors found

N = # of actual errors

n = # of actual errors found so far
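A minimal sketch of the seeding estimate (s / S = n / N, solved for N) and of the confidence formula, taking the n ≤ N case as S / (S + N + 1) with all seeded errors found. Parameter names mirror the notes' symbols; the function names and sample numbers are hypothetical.

```python
def estimate_actual_errors(S: int, s: int, n: int) -> float:
    """Error seeding: s / S = n / N, so N = n * S / s."""
    if s == 0:
        raise ValueError("no seeded errors found yet")
    return n * S / s


def confidence(S: int, N: int, n: int) -> float:
    """C as defined in the notes, assuming all S seeded errors were found."""
    if n > N:
        return 1.0
    return S / (S + N + 1)


if __name__ == "__main__":
    # Hypothetical: 20 errors seeded, 15 of them found, plus 30 actual errors.
    print(estimate_actual_errors(S=20, s=15, n=30))
    # Hypothetical: 10 seeded errors all found, 0 actual errors found, N = 0.
    print(round(confidence(S=10, N=0, n=0), 3))
```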

Intensity Of Failure:

Duty time:

function A 90%

function B 10%

Suppose 100 total errors

50 in A, 50 in B

(.9)50K + (.1)50K = 50K

(.1)50K = 5K

or

(.9)50K = 45K
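The arithmetic above can be sketched as follows, under one reading of the notes: each function's contribution to failure intensity is its duty-time fraction times the errors it contains, in units of a constant K. The function name and the constant `k` are assumptions for illustration.

```python
from __future__ import annotations


def failure_intensity(profile: dict[str, tuple[float, int]],
                      k: float = 1.0) -> dict[str, float]:
    """Contribution of each function to failure intensity.

    profile maps a function name to (duty-time fraction, error count).
    k is a hypothetical per-error constant; with k = 1.0 the results
    are in the same "K" units the notes use.
    """
    return {name: duty * errors * k for name, (duty, errors) in profile.items()}


if __name__ == "__main__":
    profile = {"A": (0.9, 50), "B": (0.1, 50)}
    contributions = failure_intensity(profile)
    for name, value in contributions.items():
        print(f"function {name}: {value:.0f}K")
    print(f"total: {sum(contributions.values()):.0f}K")
```

Under this reading, fixing the 50 errors in B removes only 5K of the 50K total, while fixing the 50 errors in A removes 45K.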

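Failures to time t = a·e^(-b·t)

Failure-free test hours needed = [ln (failures / (0.5 + failures)) / ln ((0.5 + failures) / (test failures + failures))] * (test hours to last failure)

where failures = target # of failures and test failures = # of failures found in testing so far.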
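A Python sketch of the test-hours formula, assuming the formula's `test` term means the number of failures found so far in testing (15 in the example that follows). The function and parameter names are hypothetical; the 0.5 constant is kept exactly as in the notes.

```python
import math


def zero_failure_test_hours(target_failures: float, test_failures: int,
                            hours_to_last_failure: float) -> float:
    """[ln(f / (0.5 + f)) / ln((0.5 + f) / (t + f))] * h, where
    f = target failures, t = failures found in testing,
    h = test hours up to the last failure."""
    f = target_failures
    numerator = math.log(f / (0.5 + f))
    denominator = math.log((0.5 + f) / (test_failures + f))
    return numerator / denominator * hours_to_last_failure


if __name__ == "__main__":
    kloc = 33                       # 33,000-line program
    target = round(0.03 * kloc)     # 0.99, rounded to 1 target failure
    hours = zero_failure_test_hours(target, 15, 500 - 50)
    print(round(hours))             # 77
```

Both logarithms are negative (each argument is below 1), so their ratio, and therefore the result, is positive.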

Example:

33,000-line program.

15 = # of errors found so far.

500 = total # of testing hours.

50 = # of hours since last failure.

If the target failure rate is 0.03 failures / 1000 LOC:

failures = (.03)(33) ≈ 1

[ln (1 / (0.5 + 1)) / ln ((0.5 + 1) / (15 + 1))] * 450 ≈ 77

(450 = 500 - 50 test hours to last failure, so about 77 failure-free test hours are needed.)

Software Quality:

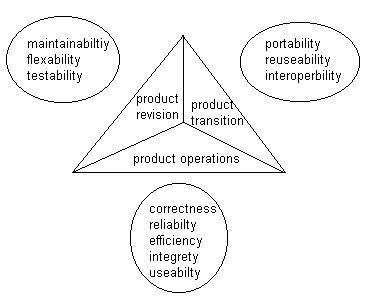

McCall's software quality functions (regression)

Software Quality Measurement Principles:

Attributes Of Effective Software Metrics:

Sample Metrics:

RE / FuP (RE = # of relationships in the data model, FuP = # of functional primitives)

(< 0.7) function-strong applications.

(> 1.5) data-strong applications.
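A sketch of the RE / FuP classification using only the two thresholds given above; the notes give no label for ratios between 0.7 and 1.5, so the code reports those as unclassified. The function name and inputs are hypothetical.

```python
def classify_application(re_count: int, fup_count: int) -> str:
    """Classify an application by its RE / FuP ratio
    (< 0.7 function-strong, > 1.5 data-strong)."""
    if fup_count <= 0:
        raise ValueError("FuP must be positive")
    ratio = re_count / fup_count
    if ratio < 0.7:
        return "function-strong"
    if ratio > 1.5:
        return "data-strong"
    # The notes give no label for ratios between the two thresholds.
    return "unclassified"


if __name__ == "__main__":
    for re_count, fup_count in [(10, 20), (20, 20), (40, 20)]:
        print(re_count, fup_count, classify_application(re_count, fup_count))
```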

Specification Quality Metrics:

nr = nf + nnf

where:

nr = # of requirements in the specification.

nf = # of functional requirements.

nnf = # of non-functional requirements.

Specificity, Q1 = nai / nr

nai = # of requirements with reviewer agreement.

Completeness, Q2 = nu / (ni * ns)

nu = # of unique functions.

ni = # of inputs.

ns = # of states.

Overall completeness, Q3 = nc / (nc + nnv)

nc = # of requirements validated as correct.

nnv = # of requirements not yet validated.
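A Python sketch of the three specification quality metrics, assuming Q1's denominator is the total number of requirements (functional plus non-functional). Function names and review numbers are hypothetical.

```python
def specificity(n_ai: int, n_f: int, n_nf: int) -> float:
    """Q1 = nai / (nf + nnf): share of requirements reviewers agree on."""
    return n_ai / (n_f + n_nf)


def completeness(n_u: int, n_i: int, n_s: int) -> float:
    """Q2 = nu / (ni * ns): unique functions over input-state combinations."""
    return n_u / (n_i * n_s)


def overall_completeness(n_c: int, n_nv: int) -> float:
    """Q3 = nc / (nc + nnv): validated-correct share of requirements."""
    return n_c / (n_c + n_nv)


if __name__ == "__main__":
    # Hypothetical review of a specification with 40 + 10 requirements.
    print(f"Q1 = {specificity(45, 40, 10):.2f}")
    print(f"Q2 = {completeness(30, 10, 5):.2f}")
    print(f"Q3 = {overall_completeness(40, 10):.2f}")
```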

Software Quality Indices:

IEEE standard - SMI (Software Maturity Index)

SMI = [Mt - (Fa + Fc + Fd)] / Mt

Mt = number of modules in current release.

Fa = modules added.

Fc = modules changed.

Fd = modules deleted.

Component Level Metrics:

Data slice.

Data tokens.

Glue tokens.

Superglue tokens.

Stickiness.

For data & control flow coupling.

For global coupling.

For environmental coupling.